Polycrises or Everything, Everywhere, All at Once

There are people who are optimistic about the future. I am not one of them. (I do have religious faith, but that’s different.) I am open to the idea that I should be more optimistic, but that doesn’t seem supported by “facts on the ground” as they say.

Some might argue that I have a bias for ignoring the good facts and focusing on the bad ones. That’s certainly possible, but I have put forth considerable effort to expose myself to people making the case for optimism. Here are links to some of my reviews of Pinker, perhaps the most notable of our current modern optimists. Beyond Pinker I've read books by Fukuyama, Deutsch, Yglesias, Zeihan and Cowen, and while these authors might not have quite the optimism of Pinker, they nevertheless put forth optimistic arguments. Finally if any of you have recommendations for optimists I’ve missed, I promise I’ll read them. (Assuming I haven’t already. That list of authors is not exhaustive.)

After doing all this reading, why do I remain unconvinced and expect to remain that way, regardless of what else I end up coming across? To understand that we first have to understand their case for optimism. It generally rests on two pillars:

First, they emphasize the amazing progress we’ve made over the last few centuries and in particular over the last few decades. And indeed there has been enormous progress in things like violence, poverty, health, infant mortality, minority rights, etc. They assume, with some justification, that this progress will continue. Generally it is dicey to try predicting the future, but they have a pretty good reason for believing that this time it’s different. Through the tools of science and reason we have created a perpetual knowledge generation machine, and increasing knowledge leads to increasing progress. Or so their argument goes.

Second, they’ll examine the things we’re worried about and make the case that they’re not as bad as people think. They argue that certain groups are incentivized to engage in fearmongering, whether because it attracts an audience, because there’s money involved, or because of their individual biases, and that these groups highlight the most apocalyptic scenarios and data while downplaying things that paint a more moderate picture. Pinker is famous, or infamous depending on your point of view, for his optimism about global warming. Which is not to say that he doesn’t think it’s a problem, merely that he believes the same tools of knowledge generation that solved, or mitigated, many of our past problems will be up to the task of mitigating, or outright solving, the problem of climate change as well.

In both of these categories Pinker and the others make excellent and compelling points. And, on balance, they’re entirely correct. Despite the pandemic, despite the war in Ukraine, despite the opioid epidemic, and a lot of other things (many of them mentioned in this space) 2023 is just about the best time to be alive, ever. Notice I said “just about”. Life expectancy has actually been going down recently, and yes the pandemic played a big role in that, but it had been stagnant since 2010. Teen mental health has gotten worse. Murders are on the rise. This is a US-centric view, but outside of the US there’s the aforementioned war in Ukraine, and famine is on the rise in much of Africa. With these statistics in mind it certainly seems possible that, as great as 2023 is, 2010 was better.

Does this mean that we’ve peaked? That things are going to get steadily worse from here on out? Or are we on something of a plateau, waiting for the next big breakthrough? Perhaps we’re on the cusp of commercializing fusion power, or of widespread enhancements from genetic engineering, or perhaps the AI singularity. 2023 does have ChatGPT, which 2010 did not. Or are our current difficulties just noise? If people look back on things from the year 2500, will everything look like one smooth exponential curve? This last possibility is basically what Pinker and the others say is happening, though some are less bullish than Pinker, and some, like Deutsch, are more bullish.

And, to be clear, on this first point, which is largely focused on human capacity, they may be right. I’m familiar with the seemingly insoluble manure crisis of the late 1800s, and how it suddenly became a complete non-issue once the automobile came along. Still, evidence continues to mount that things are slowing down, that civilization has plateaued. That science, the great engine powering all of our advances, is producing fewer great and disruptive inventions, and resistance to innovation is increasing. If it is, that would mark the big difference between now and the late 1800s. Back then science still had a lot of juice. Now? That’s questionable. And we might be lucky if it turns out to just be a plateau; the odds that we’re actually going backwards are higher than they’ll admit. But this isn’t the primary focus of this post. I’m more interested in their second claim, that the bad things people are worried about aren’t all that bad.

In general, albeit in a limited fashion, I also agree with this point. I think if you take any individual problem and sample public opinion, you will find a bias towards the apocalyptic. One that isn’t supported by the data. As an example, many, many people believe that global warming is an extinction level event. It’s not. Of course this assumes that people know about the problem in the first place. There’s a lot of ignorance out there, but it’s human nature that those who are worried about a problem likely worry more than is warranted. And Pinker, et al. are correct to point it out. That’s not the problem. The problem arises from two other sources.

First, and here I am using Pinker’s book Enlightenment Now as my primary example, there’s an unwarranted assumption of comprehensiveness. In the book, Pinker goes through everything from nuclear war, to AI Risk, to global warming, and several more subjects besides. And when it’s over you’re left with the implication of: “See you don’t need to worry about the future, I’ve comprehensively shown how all of the potential catastrophes are overblown. You can proceed with optimism!” If you’ve been reading my posts closely you may have noticed that on occasion (see for instance the book review in my last post of A Poison Like No Other) I will point out that the potential catastrophe I’ve been discussing is not one covered by Pinker.

Of course, if Pinker just missed a couple of relatively unimportant problems then this oversight is probably no big deal. He covered the big threats, and the smaller threats will probably end up being resolved in a similar fashion. The problem is we don’t know how many threats he missed. Such is the nature of possible catastrophes, there’s not some cheat sheet where they’re all listed, in order of severity. Rather the list is constantly changing, catastrophes are added and subtracted (mostly added) and their potential severity is, at best, an educated guess. Some of them are going to be overblown, as Pinker correctly points out. Some are going to be underestimated, which might end up being the case with microplastics. I’m not sure how big of a problem it will eventually end up being, but given that it didn’t even make it into Pinker’s book, I suspect he’s too dismissive of it. But beyond those catastrophes where our estimate of the severity is off, there’s the most dangerous category of all. Catastrophes that take us completely by surprise. I would offer up social media as an example of a catastrophe in this last category.

As I said, the problems of the optimists arise from two sources. The first is the assumption of comprehensiveness, the second is the ignorance of connectedness. To illustrate this I’d like to go back to a post I wrote back in 2020 about Fermi’s Paradox.

At the time I was responding to a post by Scott Alexander who argued that we shouldn’t fear that the Great Filter is ahead of us. For those who need a refresher on what that means: Fermi’s Paradox is paradoxical because if the Earth is an average example of a planet, then there should be aliens everywhere, but they’re not. Where are they? Somewhere between the millions of Earthlike planets out there and becoming an interstellar civilization there must be a filter. And it must be a great filter because seemingly no one makes it past it. Perhaps the great filter is developing life in the first place. Perhaps it’s going from single-celled to multicellular life. Or perhaps it’s ahead of us. Perhaps it’s easy to get to the point of intelligent life, but then that intelligent life inevitably destroys itself in a nuclear holocaust. In his post Alexander lists four potential great filters which might lie ahead of us and demonstrates how each of them is probably not THE filter. I bring all of this up because it’s a great example of what I’ve been talking about.

First off he makes the same assumption of comprehensiveness I accused Pinker of making — listing four possibilities and then assuming that the issue is closed when there are dozens of potential future great filters. But it’s also an example of the second problem, the way the problems are connected. As I said at the time:

(Also, any technologically advanced civilization would probably have to deal with all these problems at the same time, i.e. if you can create nukes you’re probably close to creating an AI, or exhausting a single planet’s resources. Perhaps individually [none of them is that worrisome] but what about the combination of all of them?)

Yes, Pinker and Alexander may be correct that we don’t have to worry about nuclear war, AI risk, or global warming, when considered individually. But when we combine these elements we get a whole different set of risks. Sure, rather than armageddon there’s an argument to be made that nuclear weapons actually created the Long Peace through the threat of mutually assured destruction, but what happens to that if you add in millions of climate refugees? Does MAD continue to operate? Or, maybe climate refugees won’t materialize (though it seems like we’ve got a pretty bad refugee problem even without tacking the word climate onto things). Are smaller countries going to use AI to engage in asymmetric warfare because nukes are prohibitively expensive and easy to detect? Will this end up causing enough damage that those nations with nukes will retaliate? And then there’s of course the combination of all three things: Are small nations suffering from climatic shifts going to be incentivized to misuse new technology like AI and destabilize the balance created by nukes?

That’s just three items, which produce only six possible catastrophes. But our list of potential, individual catastrophes is probably in the triple digits by this point. Even if we just limit it to the top ten, that's 3.6 million potential combinatorial catastrophes.
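For anyone who wants to check the arithmetic, here is a minimal sketch in Python. It rests on an assumption about how the figures above were reached: six and 3.6 million match 3! and 10!, which is the count you get if the order in which the problems pile up matters. The function names are my own, purely for illustration. For comparison, the sketch also counts unordered groupings of two or more problems, which gives smaller numbers that still grow very quickly.

```python
from math import factorial


def orderings(n: int) -> int:
    """Number of ways to arrange n distinct problems in sequence (n!)."""
    return factorial(n)


def multi_item_subsets(n: int) -> int:
    """Number of unordered groups containing at least two of n problems.

    There are 2**n subsets in total; subtract the empty set and the
    n single-item subsets.
    """
    return 2**n - 1 - n


if __name__ == "__main__":
    for n in (3, 10, 100):
        print(n, multi_item_subsets(n))
    print(orderings(3), orderings(10))
```

With three problems this gives 6 orderings but only 4 unordered groupings (three pairs and one triple). With ten it gives 3,628,800 orderings and 1,013 groupings. Once the list reaches a hundred items, even the more conservative unordered count exceeds 10^30, so the broader point about combinations does not depend on which way you count.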

Once you start to look for the way our problems combine, you see it everywhere:

Microplastics are an annoying pollutant all on their own, but there’s some evidence they contribute to infertility, which worsens the fertility crisis. They get ingested by marine life which heightens the problems of overfishing. Finally, they appear to inhibit plant growth, which makes potential food crises worse as well.

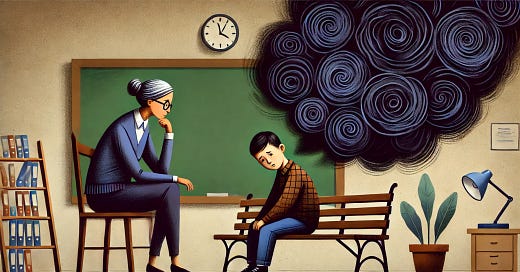

You may have seen something about the recent report released by the CDC saying that adolescent girls were reporting record rates of sadness, suicidal ideation, and sexual violence. Obviously social media has to be suspect #1 for this crisis. But I’m not sure it can be blamed for the increase in sexual violence. Isn’t the standard narrative that kids stay home on their phones rather than going out with friends? Don’t you have to be with people to suffer from sexual violence? I’d honestly be surprised if pornography didn’t play a role, but regardless this is definitely a case where two problems are interacting in bad ways.

If you believe that climate change is going to exacerbate natural disasters (and there’s evidence for and against that) then these disasters are coming at a particularly bad time. Lots of our infrastructure dates from the 50s and 60s, and much of it from even before that. But because of the pensions crisis being suffered by municipalities, we don’t have the money to conduct even normal repairs, let alone repair the additional damage caused by disasters. And most projections indicate that both the disaster problem and the pension problem are just going to get a lot worse.

I’m not the only one who’s noticed this combinatorial effect. Search for polycrisis. There are all sorts of potential crises brewing, and most of the lists (see for example here) don’t even mention the first two sets of items on my list, and they only partially cover the third one.

You may think that one or more of the things I listed are not actually big deals. You may be right, but there are so many problems operating in so many combinations, that we can be wrong about a lot of them, and still have a situation where everything, everywhere is catastrophic all at once.

Pinker and the rest are absolutely correct about the human potential to do amazing good. But they have a tendency to overlook the human potential to cause amazing harm as well. In the past, before things were so interconnected, before our powers were so great, just a few things had to go right for us to end up with the abundance we currently experience. But to stay where we are, nearly everything has to go right, and nothing very much can go wrong.

If you’re curious I did enjoy the Michelle Yeoh movie referenced in the title. I’d like to say that I got the idea for this post from the everything bagel doomsday device. But I didn’t. Still I like bagels, particularly with lox. If you’d like to buy me one, consider donating.