As longtime readers know, I’m a big fan of Nassim Nicholas Taleb. Taleb is best known for his book The Black Swan, and the eponymous theory it puts forth regarding the singular importance of rare, high impact events. His second best known work/concept is Antifragile. And while these concepts come up a lot in both my thinking and my writing, it’s an idea buried in his last book, Skin in the Game, that my mind keeps coming back to. As I mentioned when I reviewed it, the mainstream press mostly dismissed it as being unequal to his previous books. As one example, the review in the Economist said that:

IN 2001 Nassim Taleb published “Fooled by Randomness”, an entertaining and provocative book on the misunderstood role of chance. He followed it with “The Black Swan”, which brought that term into widespread use to describe extreme, unexpected events. This was the first public incarnation of Mr Taleb—idiosyncratic and spiky, but with plenty of original things to say. As he became well-known, a second Mr Taleb emerged, a figure who indulged in bad-tempered spats with other thinkers. Unfortunately, judging by his latest book, this second Mr Taleb now predominates.

A list of the feuds and hobbyhorses he pursues in “Skin in the Game” would fill the rest of this review. (His targets include Steven Pinker, subject of the lead review.) The reader’s experience is rather like being trapped in a cab with a cantankerous and over-opinionated driver. At one point, Mr Taleb posits that people who use foul language on Twitter are signalling that they are “free” and “competent”. Another interpretation is that they resort to bullying to conceal the poverty of their arguments.

This mainstream dismissal is unfortunate because I believe this book contains an idea of equal importance to black swans and antifragility, but which hasn’t received nearly as much attention. An idea the modern world needs to absorb if we’re going to prevent bad things from happening.

To understand why I say this, let's take a step back. As I’ve repeatedly pointed out, technology has increased the number of bad things that can happen. To take the recent pandemic as an example, international travel allowed it to spread much faster than it otherwise would have, and made quarantine, that old standby method for stopping the spread of diseases, very difficult to implement. Also these days it’s entirely possible for technology to have created such a pandemic. Very few people are arguing that this is what happened, but whether technology added to the problem in the form of “gain of function” research and a subsequent lab leak is still being hotly debated.

Given not only the increased risk of bad things brought on by modernity, but the risk of all possible bad things, people have sought to develop methods for managing this risk. For avoiding or minimizing the impact of these bad things. Unfortunately these methods have ended up largely being superficial attempts to measure the probability that something will happen. The best example of this is Superforecasting, where you make measurable predictions, assign confidence levels to those predictions and then you track how well you did. I’ve beaten up on Superforecasting a lot over the years, and it’s not my intent to beat up on it even more, or at least it’s not my primary intent. I bring it up now because it’s a great example of the superficiality of modern risk management. It’s focused on one small slice of preventing bad things from happening: improving our predictions on a very small slice of bad things. I think we need a much deeper understanding of how bad things happen.

Superforecasting is an example of a more shallow understanding of bad things. The process has several goals, but I think the two biggest are:

First, to increase the accuracy of the probabilities being assigned to the occurrence of various events and outcomes. There is a tendency among some to directly equate “risk” with this probability. Which leads to statements like, “The risk of nuclear war is 1% per year.” I would certainly argue that any study of risk goes well beyond probabilities, that what we’re really looking for is any and all methods for preventing bad things from happening. And while understanding the odds of those events is a good start, it's only a start. And if not done carefully it can actually impair our preparedness.

The second big goal of superforecasting is to identify those people who are particularly talented at assigning such probabilities in order that you might take advantage of those talents going forward. This hopefully leads to a future with a better understanding of risk, and consequent reduction in the number of bad things that happen.

The key principle in all of this is our understanding of risk. When people end up equating risk with simply improving our assessment of the probability that an event will occur, they end up missing huge parts of that understanding. As I’ve pointed out in the past, their big oversight is the role of impact—some bad things are worse than others. But they are also missing a huge variety of other factors which contribute to our ability to avoid bad things, and this is where we get to the ideas from Skin in the Game.

To begin with, Taleb introduces two concepts: “ensemble probability” and “time probability”. To illustrate the difference between the two he uses the example of gambling in a casino. To understand ensemble probability you should imagine 100 people all gambling on the same day. Taleb asks, “How many of them go bust?” Assuming that they each have the same amount of initial money and make the same bets and taking into account standard casino probabilities, about 1% of people will end up completely out of money. So in a starting group of 100, one gambler will go completely bust. Let’s say this is gambler 28. Does the fact that gambler 28 went bust have any effect on the amount of money gambler 29 has left? No. The outcomes are completely independent. This is ensemble probability.

To understand time probability, imagine that instead of 100 people all gambling on the same day, one person gambles 100 days in a row. If we use the same assumptions, then once again the gambler has approximately a 1% chance of going bust on any given day and ending up completely out of money. But this time, since it’s the same person, once they go bust they’re done. If they go bust on day 28, then there is no day 29. This is time probability. And Taleb’s argument is that when experts (like superforecasters) talk about probability they generally treat things as ensembles, whereas reality mostly deals in time probability. The two might also be labeled independent and dependent probabilities, respectively.
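The gap between the two framings is easy to put numbers on. What follows is only a minimal sketch: it assumes a flat 1% chance of ruin per gambling day that is independent from one day to the next, which is my simplification of the casino example rather than anything Taleb specifies, and the function names are made up for illustration.

```python
# Assumption (not from Taleb): a flat 1% chance of ruin per gambling day,
# independent from one day to the next.
P_BUST = 0.01
N = 100  # 100 gamblers in the ensemble case, 100 days in the time case


def expected_busts_in_ensemble(n_gamblers: int, p_bust: float) -> float:
    """Expected number of gamblers who go bust on a single shared day."""
    return n_gamblers * p_bust


def survival_over_time(n_days: int, p_bust: float) -> float:
    """Chance that one gambler is still solvent after n_days in a row.

    Every day has to be survived, so the daily survival odds multiply.
    """
    return (1.0 - p_bust) ** n_days


ensemble_busts = expected_busts_in_ensemble(N, P_BUST)
still_playing = survival_over_time(N, P_BUST)

print(f"Ensemble: about {ensemble_busts:.0f} of {N} gamblers goes bust")
print(f"Time: {still_playing:.1%} chance of surviving all {N} days")
print(f"Time: {1 - still_playing:.1%} chance of ruin somewhere along the way")
```

Under these assumptions the ensemble loses about one gambler in a hundred, while the lone gambler playing 100 days in a row is more likely than not to be ruined (roughly 63%) before the run is over. Same 1%, very different outcome.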

As Taleb is most interested in investing, the example he gives relates to individual investors, who are often given advice as if they have a completely diversified and independent portfolio where a dip in their emerging market holdings does not affect their Silicon Valley stocks. In reality, most individual investors exist in a situation where everything in their life is strongly linked and mostly not diversified. As an example, most of their net worth is probably in their home, a place with definite dependencies. So if 2007 comes along and their home tanks, not only might they be in danger of being on the street, it also might affect their job (say if they were in construction). Even if they do have stocks they may have to sell them off to pay the mortgage because having a place to live is far more important than maintaining their portfolio diversification. Or as Taleb describes it:

...no individual can get the same returns as the market unless he has infinite pockets...This is conflating ensemble probability and time probability. If the investor has to eventually reduce his exposure because of losses, or because of retirement, or because he got divorced to marry his neighbor’s wife, or because he suddenly developed a heroin addiction after his hospitalization for appendicitis, or because he changed his mind about life, his returns will be divorced from those of the market, period.

Most of the things Taleb lists there are black swans. For example one hopes that developing a heroin addiction would be a black swan for most people. In true ensemble probability black swans can largely be ignored. If you’re gambler 29, you don’t care if gambler 28 ends up addicted to gambling and permanently ruined. But in strict time probability any negative black swan which leads to ruin strictly dominates the entire sequence. If you’re knocked out of the game on day 28 then there is no day 29, or day 59 for that matter. It doesn’t matter how many other bad things you avoid; one bad thing, if bad enough, destroys all your other efforts. Or as Taleb says, “in order to succeed, you must first survive.”

Of course most situations are on a continuum between time probability and ensemble probability. Even absent some kind of broader crisis, there’s probably a slightly higher chance of you going bust if your neighbor goes bust—perhaps you’ve lent them money, or in their desperation they sue you over some petty slight. If you’re in a situation where one company employs a significant percentage of the community, that chance goes up even more. The chance gets higher if your nation is in crisis and it gets even higher if there’s a global crisis. This finally takes us to Taleb’s truly big idea, or at least the idea I mentioned in the opening paragraph. The one my mind kept returning to since reading the book in 2018. He introduces the idea with an example:

Let us return to the notion of “tribe.” One of the defects modern education and thinking introduces is the illusion that each one of us is a single unit. In fact, I’ve sampled ninety people in seminars and asked them: “what’s the worst thing that can happen to you?” Eighty-eight people answered “my death.”

This can only be the worst-case situation for a psychopath. For after that, I asked those who deemed that their worst-case outcome was their own death: “Is your death plus that of your children, nephews, cousins, cat, dogs, parakeet, and hamster (if you have any of the above) worse than just your death?” Invariably, yes. “Is your death plus your children, nephews, cousins (...) plus all of humanity worse than just your death?” Yes, of course. Then how can your death be the worst possible outcome?

You can probably see where I’m going here, but before we get to that, in defense of the Economist review, the quote I just included has the following footnote:

Actually, I usually joke that my death plus someone I don’t like surviving, such as the journalistic professor Steven Pinker, is worse than just my death.

I have never argued that Taleb wasn’t cantankerous. And I think being cantankerous given the current state of the world is probably appropriate.

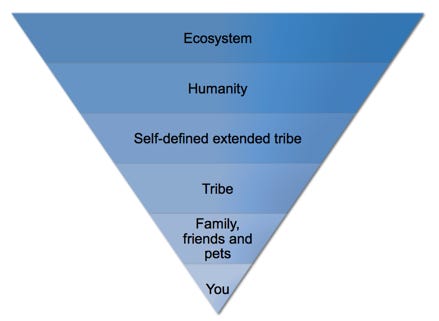

In any event, he follows up this discussion of asking people to name the worst thing that could happen to them with an illustration. The illustration is an inverted pyramid sliced into horizontal layers of increasing width as you rise from the tip of the pyramid to its “base”. The layers, from top to bottom, are:

Ecosystem

Humanity

Self-defined extended tribe

Tribe

Family, friends, and pets

You

The higher up you are, the worse the risk. While no one likes to contemplate their own ruin, the ruin of all of their loved ones is even worse. And we should do everything in our power to ensure the survival of humanity and the ecosystem, even if it means extreme risk to ourselves and our families (a point I’ll be returning to in a moment). If we want to prevent really bad things from happening we need to focus less on risks to individuals and more on risks to everyone and everything.

By combining this inverted pyramid with the concepts of time probability and ensemble probability, we can start drawing some useful conclusions. To begin with, not only are time probabilities more catastrophic at higher levels, they are also more likely to be present at higher levels. A nation has a lot of interdependencies whereas an individual might have very few. To put it another way, if an individual dies, the consequences, while often tragic, are nevertheless well understood and straightforward to manage. There are entire industries devoted to smoothing out the way. While if a nation dies, it’s always calamitous with all manner of consequences which are poorly understood. And if all of humanity dies no mitigation is possible.

With that in mind, the next conclusion is that we should be trying to push risks down as low as possible—from the ecosystem to humanity, from humanity to nations, from nations to tribes, from tribes to families and from families to individuals. We are also forced to conclude that, where possible, we should make risks less interdependent. We should aim for ensemble probabilities rather than time probabilities.

All of this calls to mind the principle of subsidiarity or federalism and certainly there is a lot of overlap. But whereas subsidiarity is mostly about increasing efficiency, here I’m specifically focused on reducing harm. Of making negative black swans less catastrophic—of understanding and mitigating bad things.

Of course when you hear this idea that we should push risks from tribes to families or from nations to families you immediately recoil. And indeed the modern world has spent a lot of energy moving risk in exactly the opposite direction. Pushing risks up the scale, moving risk off of individuals and accumulating it in communities, states and nations. And sometimes placing the risk with all of humanity. It used to be that individuals threatened each other with guns, and that was a horrible situation with widespread violence, but now nations threaten each other with nukes. The only way that’s better is if the nukes never get used. So far we’ve been lucky, let’s really hope that luck continues.

Some, presumably including superforecasters, will argue that by moving risk up the scale it’s easier to quantify and manage, and thereby reduce. I have seen no evidence that these people understand risk at different scales, nor any evidence that they make any distinction between time probabilities and ensemble probabilities, but for the moment let’s grant that they’re correct that by moving risk up the scale we lessen it. Say that the risk that any individual will get shot, in the Wild West for example, is 5% per year, but the risk that any nation will get nuked is only 1% per year. Yes, the risk has been reduced. One is less than five. But should that 1% chance come to pass (and given enough years it certainly will, i.e. it’s a time probability) then far more than 5% of people will die. We’ve prevented one variety of bad things by creating the possibility (albeit a smaller one) that a far worse event will happen.
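To see what “given enough years” means in practice, here is a rough sketch. It takes the hypothetical 1% per year figure from the paragraph above at face value and assumes it stays constant and independent from year to year, which is a simplification for illustration and not a real estimate of anything. The helper names are invented for this example.

```python
import math

# Assumption: the essay's hypothetical 1% annual chance of the catastrophe,
# held constant and independent from year to year.
ANNUAL_RISK = 0.01


def chance_within(years: int, annual_p: float) -> float:
    """Probability the event happens at least once within the given years."""
    return 1.0 - (1.0 - annual_p) ** years


def years_until(target: float, annual_p: float) -> int:
    """Smallest whole number of years at which the cumulative chance
    of the event reaches the target probability."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - annual_p))


for horizon in (10, 50, 100):
    print(f"{horizon:>3} years: {chance_within(horizon, ANNUAL_RISK):.1%}")

coin_flip_years = years_until(0.5, ANNUAL_RISK)
near_certain_years = years_until(0.99, ANNUAL_RISK)
print(f"Better than even odds after {coin_flip_years} years")
print(f"99% cumulative chance after {near_certain_years} years")
```

With these inputs the cumulative chance passes a coin flip at 69 years and reaches 99% at 459 years. A small annual number looks reassuring only as long as you ignore how many years you intend to keep playing.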

The pandemic has provided an interesting test of these ideas, and I’ll be honest it also illustrates how hard it can be to apply these ideas to real situations. But there wouldn’t be much point to this discussion if we didn’t try.

First let’s consider the vaccine. I’ve long thought that vaccination is a straightforward example of antifragility. Of a system making gains from stress. It also seems pretty straightforward that this is an example of moving risk down the scale. Of moving risk from the community to the individual, and I know the modern world has taught us we should never have to do that, but as I’ve pointed out it’s a good thing. So vaccination is an example of moving risk down the inverted pyramid.

On the other hand the pandemic has given us examples of risk being moved up the scale. The starkest example is government spending, where we have spent enormous amounts of money to cushion individuals from the risk of unemployment and homelessness. Thereby moving the risk up to the level of the nation. We have certainly prevented a huge number of small bad things from happening, but have we increased the risk of a singular catastrophic event? I guess we’ll find out. Regardless it does seem to have moved things from an ensemble probability to a time probability. Perhaps this government intervention won’t blow up, but we can’t afford to have any of them blow up, because if intervention 28 blows up there is no intervention 29.

Of course the murky examples far outweigh the clear ones. Are mask mandates pushing things down to the level of the individual? Or is it better to not have a mandate? Thereby giving individuals the option of taking more risk because that’s the layer we want risk to operate at? And of course the current argument about vaccination is happening at the level of the state and community. Biden is pushing for a vaccination mandate on all companies that employ more than 100 people and the Texas governor just issued an executive order banning such a mandate. I agree it can be difficult to draw the line. But there is one final idea from Skin in the Game that might help.

Out of all of the foregoing Taleb comes up with a very specific definition of courage.

Courage is when you sacrifice your own well being for the sake of the survival of a layer higher than yours.

I do think the pandemic is a particularly complicated situation. But even here courage would have definitely helped. It would have allowed us to conduct human challenge trials, which would have shortened the vaccination approval process. It would have made the decision to reopen schools easier. And yes while it’s hard to imagine we wouldn’t have moved some risk up the scale, it would have kept us from moving all of it up the scale.

I understand this is a fraught topic. For most people the ideal is to have no bad things happen, ever. But that’s not possible. Bad things are going to happen, and the best way to keep them from being catastrophic things is more courage. Something I fear the modern world is only getting worse at.

I talk a lot about bad things. And you may be thinking why doesn’t he ever talk about good things? Well here’s something good, donating. I mean I guess it’s mostly just good for me, but what are you going to do?